DeepSeek V3.2 Is a Reminder: Build Flexibility Into Your AI Strategy Now

Open-source models are catching up faster than you think

DeepSeek just dropped V3.2 and its high-compute variant “Speciale,” both fully open source under an MIT license. The benchmarks are striking, but the bigger story isn’t about who’s “winning.” It’s about what this means for how you should be thinking about AI strategy.

The Numbers That Matter

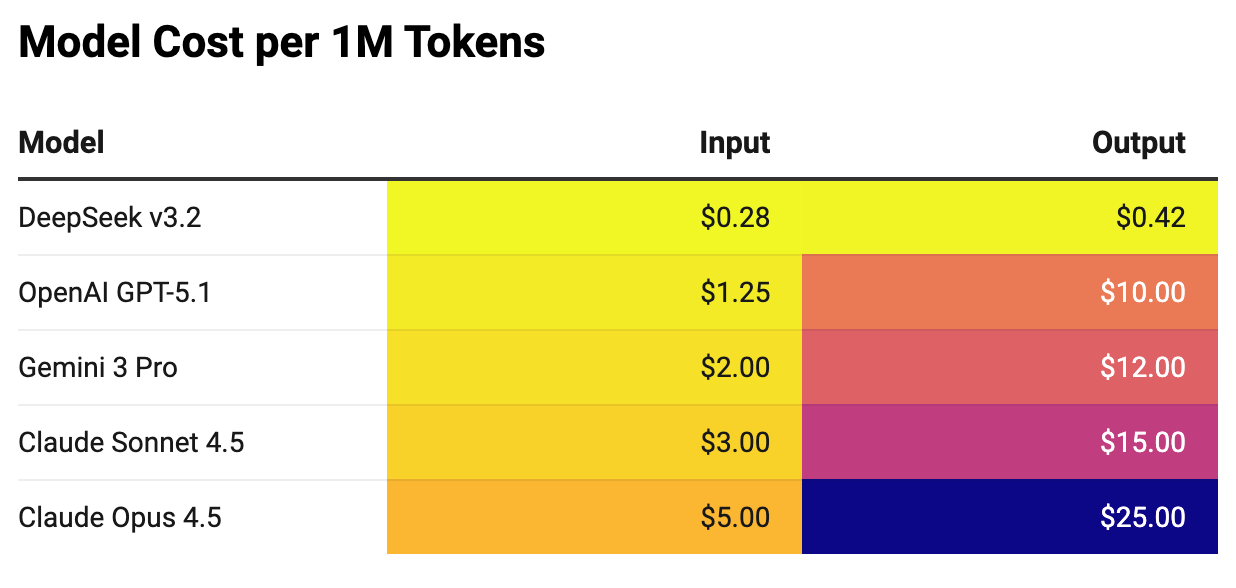

Let’s start with costs, because this is where it gets real:

For a workload generating 1 million output tokens per month, those prices work out to a 20-30x cost differential in DeepSeek's favor.
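The differential is simple arithmetic: per-token price times volume. Here is a minimal sketch, assuming flat per-million-token output pricing. The two prices are illustrative placeholders, not quoted list prices, so substitute each provider's current figures.

```python
# Illustrative placeholder prices in USD per 1M output tokens (assumptions, not quotes).
PREMIUM_PRICE_PER_M = 12.00  # hypothetical closed frontier model
OPEN_PRICE_PER_M = 0.50      # hypothetical open-weight model served via API


def monthly_cost(output_tokens: int, price_per_million: float) -> float:
    """USD cost of a month's output tokens at a flat per-million-token price."""
    return output_tokens / 1_000_000 * price_per_million


def cost_ratio(premium_per_m: float, open_per_m: float) -> float:
    """How many times more the premium model costs per output token."""
    return premium_per_m / open_per_m


tokens = 1_000_000  # the workload size used in the text
premium = monthly_cost(tokens, PREMIUM_PRICE_PER_M)
cheap = monthly_cost(tokens, OPEN_PRICE_PER_M)
ratio = cost_ratio(PREMIUM_PRICE_PER_M, OPEN_PRICE_PER_M)
print(f"premium ${premium:.2f}, open ${cheap:.2f}, ratio {ratio:.0f}x")
# prints: premium $12.00, open $0.50, ratio 24x
```

Because both costs scale linearly with volume, the ratio is the same at 1 million or 1 billion tokens. Volume changes the absolute dollar gap, which is what shows up on the bill. Like the text, this sketch counts output tokens only.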

The performance? DeepSeek V3.2-Speciale scored 96.0% on AIME 2025 (advanced math) versus GPT-5 High’s 94.6%. On Terminal Bench 2.0 (coding workflows), DeepSeek hit 46.4% compared to GPT-5’s 35.2%. The model achieved gold-medal performance in the 2025 International Mathematical Olympiad and International Olympiad in Informatics.

DeepSeek keeps costs this low partly through DeepSeek Sparse Attention (DSA), an efficient attention mechanism that cuts compute on long-context tasks while preserving output quality.
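To make "sparse attention" concrete: standard attention lets each query weigh every token in the context, so cost grows with context length. A sparse scheme attends to only a selected subset. The sketch below is a generic top-k variant in plain Python, not DeepSeek's DSA implementation. The function names and the choice of `k` are illustrative assumptions. For simplicity it selects tokens using the full attention scores; a practical sparse scheme would use a cheaper selection step so that full pass is avoided.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def topk_sparse_attention(query: Vector, keys: List[Vector],
                          values: List[Vector], k: int) -> Vector:
    """Attend only to the k highest-scoring keys instead of all of them.

    Illustrative only: selection reuses the scaled dot-product scores.
    """
    d = len(query)
    scores = [dot(query, key) / math.sqrt(d) for key in keys]
    k = min(k, len(keys))
    # Indices of the k highest-scoring context positions.
    selected = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    weights = softmax([scores[i] for i in selected])
    # Weighted sum over the selected value vectors only.
    out = [0.0] * len(values[0])
    for w, i in zip(weights, selected):
        for j, v in enumerate(values[i]):
            out[j] += w * v
    return out
```

With `k` fixed, the weighted sum touches `k` value vectors no matter how long the context is. That is where the long-context savings come from, once selection itself is cheap.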

This is an open-source model matching or beating frontier closed models on key benchmarks at a fraction of the cost.

We’re Still Early. Act Like It.

Here’s what gets lost in the “who’s ahead” debates: we are still in the early, volatile phase of AI development. Today’s pricing won’t be tomorrow’s pricing. Assumptions about market structure haven’t settled.

According to Epoch AI research from March 2025, LLM inference prices are dropping between 9x and 900x per year depending on the benchmark, with a median of 50x per year. Since January 2024, that median rate has accelerated to 200x per year.
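Rates like "50x per year" compound, so it helps to translate them to shorter horizons. Here is a minimal sketch, assuming the decline is a steady exponential at the cited rate. That is a simplification: the Epoch figures are median trends across benchmarks, not a forecast for any particular model.

```python
def drop_factor(annual_factor: float, months: float) -> float:
    """How many times cheaper prices get over `months` at a constant annual rate.

    Assumes steady exponential decline: factor = annual_factor ** (months / 12).
    """
    return annual_factor ** (months / 12)


def price_after(initial_price: float, annual_factor: float, months: float) -> float:
    """Projected price after `months`, under the same constant-rate assumption."""
    return initial_price / drop_factor(annual_factor, months)


for rate in (50, 200):  # the median rates cited above
    print(f"{rate}x/year: {drop_factor(rate, 6):.1f}x cheaper after six months")
# prints:
# 50x/year: 7.1x cheaper after six months
# 200x/year: 14.1x cheaper after six months
```

Read the other way: if the 200x median held, a price that looks fixed today would fall roughly 14-fold within six months.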

We’ve seen this pattern before. In early 2021, open-source databases overtook commercially licensed databases in popularity for the first time, according to DB-Engines. Oracle, which once dominated enterprise databases, now sits at about 12% usage among developers. The premium option didn’t disappear, but it went from default choice to niche option as open alternatives matured.

AI is moving faster than databases ever did. DeepSeek caused market chaos in January with the release of R1, then went quiet for the better part of a year while OpenAI shipped GPT-5 (then 5.1) and Anthropic released its Claude 4.5 family of models. With yesterday’s release, DeepSeek is back, and it came back hard.

The Cost Nuance

There’s an important caveat. Right now, many teams chase the frontier, upgrading to GPT-5 or Claude 4.5 as soon as they ship. As a result, they don’t capture the cost reductions happening at the capability tier they actually need. They keep paying premium prices because they keep upgrading to premium models.

But this won’t last forever. At some point, “good enough” really is good enough for most business use cases. When teams stop reflexively chasing the latest model and instead match capability to need, the dramatic cost reductions we’re seeing will finally flow through to the bottom line.

The question is whether your architecture will be ready to take advantage of it.

The Case for Model Agnosticism

Over the last six months I’ve talked with a range of product and engineering leaders, and building in model flexibility has come up as a consistent priority. In my own side projects, I always use OpenRouter, a routing layer that lets you switch between models without changing your integration.

Tools like OpenRouter, Chutes.ai, and self-hosted options like LiteLLM aren’t fringe anymore. They’re becoming standard infrastructure for teams that, sensibly, want to keep their options open.
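Part of what makes a routing layer like OpenRouter cheap to adopt is that it exposes an OpenAI-compatible chat completions endpoint, so the model becomes a string in the request rather than a separate integration. Here is a minimal sketch using only the Python standard library. The default model slug and the `LLM_MODEL` environment variable are hypothetical placeholders (check OpenRouter's model list for real IDs), and the request is built but not sent.

```python
import json
import os
import urllib.request

# OpenRouter's OpenAI-compatible chat completions endpoint.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"

# Hypothetical default slug; the model is configuration, not code.
MODEL = os.environ.get("LLM_MODEL", "deepseek/deepseek-v3.2")


def build_chat_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat request. Switching models changes only `model`."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_chat_request(MODEL, "Summarize this support ticket.", api_key="YOUR_KEY")
# Sending it would be urllib.request.urlopen(req), then json.load() on the response.
```

Moving from DeepSeek to a Claude or GPT model is then a change to `LLM_MODEL`, not to the code. LiteLLM fills the same role if you'd rather run the proxy yourself.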

This isn’t about abandoning Claude or GPT. It’s about recognizing that when the landscape moves this fast, flexibility has real value:

Vendor leverage: Viable open-source alternatives give you negotiating power, even if you never switch.

Cost optionality: Being able to route workloads to cheaper models as capability gaps narrow pays off as pricing continues to shift.

Use-case matching: Not every task needs a frontier model. Route complex reasoning to GPT-5, and handle simpler tasks with DeepSeek (or another open-source model).
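Use-case matching and cost optionality can be combined in a small routing table. Here is a minimal sketch. The task tiers, keyword heuristic, and model slugs are all hypothetical placeholders. A production router would more likely classify tasks using evals on your own workload than by keywords.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    SIMPLE = "simple"
    COMPLEX = "complex"


@dataclass(frozen=True)
class Route:
    primary: str
    fallback: str


# Hypothetical routing table; model IDs are placeholders for your provider's slugs.
ROUTES = {
    Tier.COMPLEX: Route(primary="openai/gpt-5", fallback="deepseek/deepseek-v3.2-speciale"),
    Tier.SIMPLE: Route(primary="deepseek/deepseek-v3.2", fallback="openai/gpt-5"),
}


def classify(task: str) -> Tier:
    """Toy heuristic (assumption): long or reasoning-heavy prompts count as complex."""
    keywords = ("prove", "plan", "debug", "analyze")
    if len(task) > 2000 or any(word in task.lower() for word in keywords):
        return Tier.COMPLEX
    return Tier.SIMPLE


def pick_model(task: str, unavailable: frozenset = frozenset()) -> str:
    """Return the primary model for the task's tier, or its fallback if unavailable."""
    route = ROUTES[classify(task)]
    return route.fallback if route.primary in unavailable else route.primary


print(pick_model("Extract the invoice date from this email"))  # deepseek/deepseek-v3.2
print(pick_model("Debug this failing integration test"))       # openai/gpt-5
```

The fallback column is where vendor leverage becomes concrete: if a provider raises prices or has an outage, the change is one line in `ROUTES`.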

…so what?

The AI landscape is more dynamic than the past few months suggested. Just because closed-source models have been making impressive strides doesn’t mean open source stopped innovating.

The practical takeaway is straightforward: if you haven’t already, focus on building flexibility into your AI stack now. Not because you need to switch today, but because the cost of that flexibility is low and the optionality is valuable in a market that’s still finding its shape.

We’re early. The model that’s best today may not be best in six months. The pricing that seems fixed today will look very different soon. Build accordingly.