Did Docker Just Solve MCP’s Two Biggest Problems?

The speed of this ecosystem is the real story

Run this command and you’ve given a stranger access to your machine:

npx -y @some-random/mcp-server

That’s how most MCP servers get installed today. No sandboxing. No verification. Just arbitrary code execution with whatever permissions your terminal has.

Docker’s blog calls it what it is: developers “making a dangerous trade-off: convenience over security.”

For teams expecting to run MCP servers in trusted environments, this is usually a non-starter. You can’t have engineers pulling random servers the way they might install npm packages for a side project. The blast radius is pretty much unlimited.

But trust isn’t the only blocker.

The Context Bloat Problem

Anthropic recently acknowledged what power users have been experiencing:

“Today developers routinely build agents with access to hundreds or thousands of tools across dozens of MCP servers. However, as the number of connected tools grows, loading all tool definitions upfront and passing intermediate results through the context window slows down agents and increases costs.”

Connect 25 MCP servers and your agent loads hundreds, possibly thousands, of tool definitions before doing anything useful. That’s slower inference, higher costs, and worse performance. The “lost in the middle” problem means models struggle to use tools buried deep in massive context windows.

“Just make the context window bigger” doesn’t solve this. You’re still paying for tokens you don’t need and degrading the model’s ability to find what matters.
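To put rough numbers on that, here is a back-of-the-envelope sketch. The 25 servers come from the scenario above; the tools-per-server and tokens-per-tool figures are assumptions I picked for illustration, not measurements from any real catalog.

```python
# Rough estimate of how many tokens tool definitions consume per request.
# TOOLS_PER_SERVER and TOKENS_PER_TOOL are illustrative assumptions.

def definition_tokens(servers: int, tools_per_server: int, tokens_per_tool: int) -> int:
    """Tokens spent on tool definitions for the given number of loaded servers."""
    return servers * tools_per_server * tokens_per_tool


SERVERS = 25            # from the scenario above
TOOLS_PER_SERVER = 20   # assumed average
TOKENS_PER_TOOL = 150   # assumed size of one tool definition

everything = definition_tokens(SERVERS, TOOLS_PER_SERVER, TOKENS_PER_TOOL)
only_needed = definition_tokens(2, TOOLS_PER_SERVER, TOKENS_PER_TOOL)

print(f"all 25 servers loaded upfront: {everything} tokens per request")
print(f"2 servers loaded on demand:    {only_needed} tokens per request")
```

Under these assumptions, the upfront approach spends 75,000 tokens on definitions before the agent reads a single word of the task, against 6,000 if only two servers are actually in play. A bigger context window changes neither number.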

Docker Built What Anthropic Proposed

Anthropic describes solutions like “progressive disclosure”: loading tool definitions on-demand rather than upfront. They suggest a search_tools capability so agents can find relevant tools without loading everything.

Docker’s Dynamic MCP implements exactly this:

mcp-find: Search the catalog for MCP servers by name or description

mcp-add: Add a server to the current session on-demand

mcp-remove: Remove servers you no longer need

Instead of pre-configuring every MCP server before starting a session, agents discover and add servers during the conversation. The context window contains only what’s actually being used ✨.
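The idea is easier to see in a toy model. The sketch below is not Docker’s implementation: the `Server` and `Session` classes, the three catalog entries, and their tool names are all hypothetical, and the methods only mirror the roles of mcp-find, mcp-add, and mcp-remove.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    name: str
    description: str
    tools: list[str]


@dataclass
class Session:
    """Toy model: only tools from added servers occupy the context."""
    catalog: dict[str, Server]
    active: dict[str, Server] = field(default_factory=dict)

    def find(self, query: str) -> list[str]:
        """Search the catalog by name or description (the mcp-find role)."""
        q = query.lower()
        return sorted(
            s.name for s in self.catalog.values()
            if q in s.name.lower() or q in s.description.lower()
        )

    def add(self, name: str) -> None:
        """Bring one server into the session (the mcp-add role)."""
        self.active[name] = self.catalog[name]

    def remove(self, name: str) -> None:
        """Drop a server that is no longer needed (the mcp-remove role)."""
        self.active.pop(name, None)

    def tools_in_context(self) -> list[str]:
        """Tool names the model would currently see."""
        return [tool for s in self.active.values() for tool in s.tools]


# Hypothetical catalog entries, for illustration only.
catalog = {
    s.name: s
    for s in [
        Server("github", "Repositories, issues and pull requests", ["list_issues", "create_pr"]),
        Server("postgres", "Query a SQL database", ["run_query"]),
        Server("slack", "Send and read chat messages", ["post_message"]),
    ]
}

session = Session(catalog)
matches = session.find("issues")    # search first, load nothing yet
session.add(matches[0])             # load only the server that matched
print(session.tools_in_context())
session.remove("github")            # unload it when the task is done
print(session.tools_in_context())
```

The catalog can grow as large as it likes. What the model sees is bounded by what the session has added, not by what exists.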

Trust Through Containerization

For the security problem, Docker’s MCP Catalog layers trust through containerization plus curation.

Docker-built servers get the full treatment:

Cryptographically signed images

Complete provenance and SBOM (Software Bill of Materials) metadata

Continuous security maintenance

Community-built servers still run containerized:

Isolated with restricted resources (1 CPU, 2 GB memory)

No host filesystem access by default

Clear labeling distinguishing them from Docker-maintained options

This isn’t blind trust. It’s trust with verification and blast radius limits. For teams, the Docker-built servers provide production-level confidence. For individuals, where the stakes may be lower, containerization still limits the damage a malicious server could do.
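For a sense of what those limits look like in practice, here is a minimal sketch that builds a `docker run` command with the same caps. The flags (`--rm`, `-i`, `--cpus`, `--memory`) are standard Docker CLI options, but the image name `example/mcp-server` is hypothetical, and I’m not claiming this is the exact invocation Docker’s tooling uses.

```python
import shlex


def sandboxed_command(image: str, cpus: str = "1", memory: str = "2g") -> list[str]:
    """Build a `docker run` argv with resource caps and no volume mounts."""
    return [
        "docker", "run",
        "--rm",              # remove the container when it exits
        "-i",                # keep stdin open for a stdio-based server
        "--cpus", cpus,      # cap CPU usage
        "--memory", memory,  # cap memory usage
        image,               # no -v/--mount, so no host directories are shared
    ]


# Hypothetical image name, for illustration only.
cmd = sandboxed_command("example/mcp-server")
print(shlex.join(cmd))
```

Compare that with the `npx -y` one-liner at the top: same convenience, but the process gets a capped slice of the machine and no host directories instead of everything your terminal can reach.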

The Pace Is the Point

Here’s the timeline:

November 2022: ChatGPT launches

November 2024: Anthropic launches MCP

March 2025: OpenAI adopts MCP

November 2025: Docker ships Dynamic MCP with containerization and curation

MCP is 13 months old. We already have what feels like an unlimited supply of MCP servers, major competitors on the same open standard, and now at least one production infrastructure option that addresses the protocol’s biggest limitations.

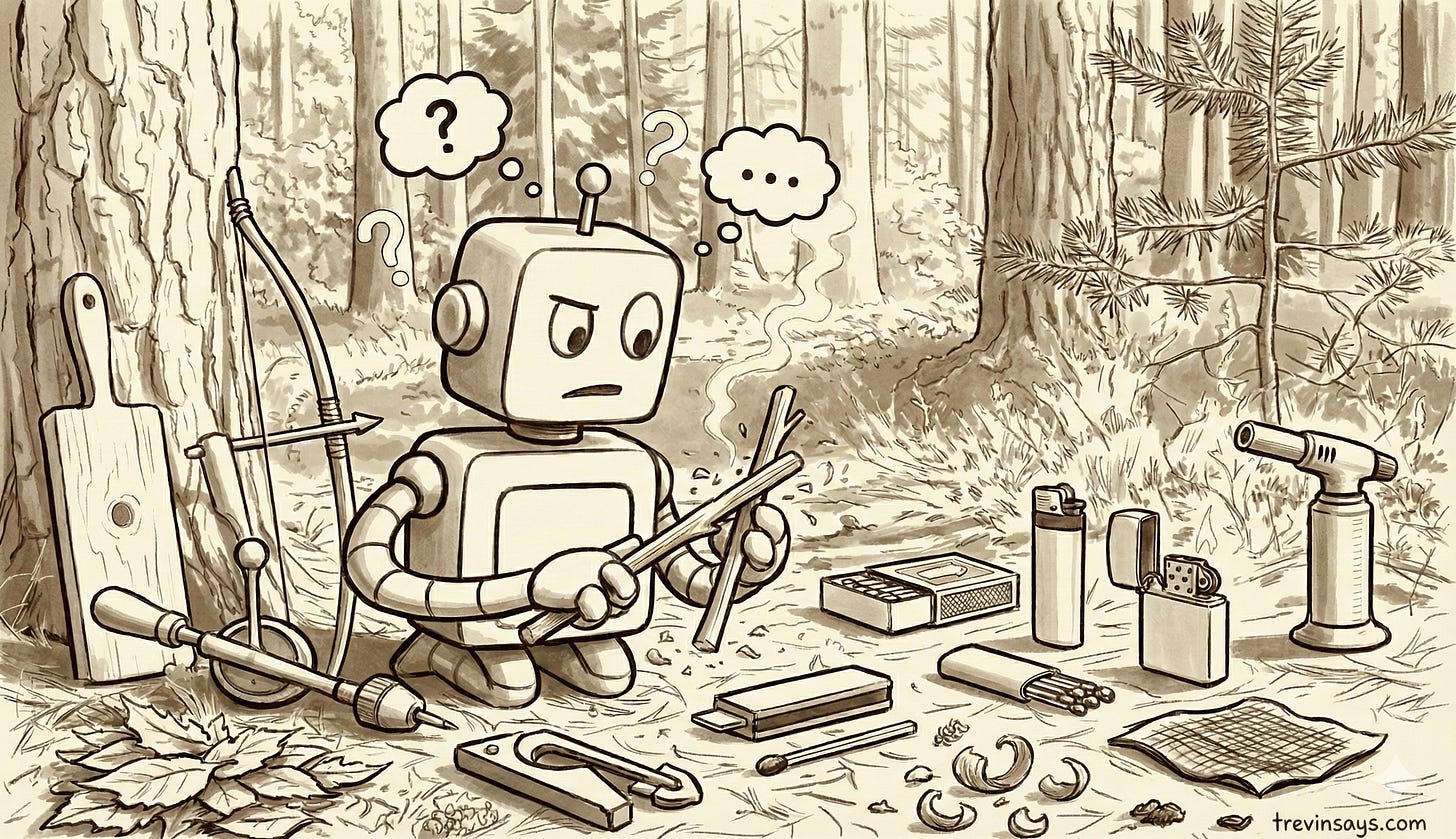

We’re still rubbing sticks together to make fire, but we went from sticks to matches to lighters in a year.

The AI we use today is the worst it will ever be, but that’s not a criticism. It’s the most optimistic thing you can say about where we’re headed.

The Takeaway?

I don’t think Docker’s Dynamic MCP is the whole story. What stands out more to me is just how quickly real problems are finding solutions in this new age of building with AI.

If you’ve been waiting because MCP felt too risky or too unwieldy for serious use, the blockers are starting to fall. Worth paying attention.